In case you missed it (ICYMI), Meta announced SAM 2 yesterday!

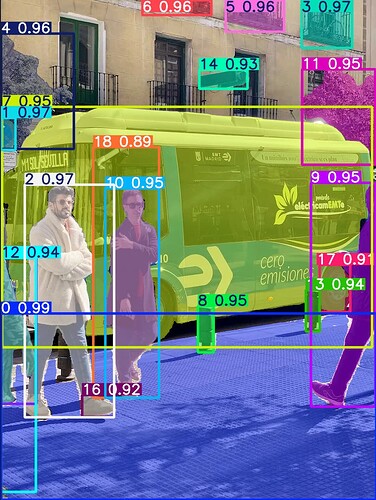

SAM 2 extends its predecessor with video segmentation. It uses a transformer architecture with streaming memory for real-time processing and is trained on the new SA-V dataset.

Resources: Paper, Project, Demo, Dataset, Blog

SAM 2 was developed to meet the need for a foundation model that can handle prompt-based visual segmentation across a broad range of tasks and visual domains. It is enabled by a model-in-the-loop data engine that improves both the model and the data through user input.

Well, you know Ultralytics is going to be working on integrating SAM 2 into our Python library! Check out the new Docs page, and keep an eye out for more updates.

What are you most excited about with SAM 2?