
Hello. Please help me.

Toxite

April 14, 2025, 8:00pm


Can you post the training command?

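```python
import torch

# `model` is a YOLO model created elsewhere in the script (its definition was not
# included in the post), e.g. `from ultralytics import YOLO; model = YOLO(...)`.


def train_model(data, epochs, batch, imgsz, patience, resume, counter):
    try:
        results = model.train(
            data=data,
            epochs=epochs,
            batch=batch,
            imgsz=imgsz,
            patience=patience,
            resume=resume,
        )
    except torch.OutOfMemoryError:
        raise
        # Disabled retry: free the CUDA cache and resume from the last checkpoint.
        # torch.cuda.empty_cache()
        # train_model(
        #     data=data,
        #     epochs=epochs,
        #     batch=batch,
        #     imgsz=imgsz,
        #     patience=patience,
        #     resume=True,
        #     counter=counter,
        # )

    # Append the class-0 metrics to a log file named after the run settings.
    file_name = f'results_{epochs}_epochs_{imgsz}_imgsz.txt'
    with open(file_name, 'a+') as f:
        f.write(f'{batch}. {str(results.class_result(0))}\n')


train_model(
    data='datasets/earrings_data.yaml',
    epochs=100,
    batch=16,
    imgsz=96 * 7,  # 672, matching the "Size" column in the log below
    patience=0,
    resume=False,
    counter=1,
)
```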
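The commented-out `except` branch suggests the intent was to free the CUDA cache and resume after an OOM, but as a recursive call it has no retry limit. A minimal sketch of a bounded retry, using a hypothetical helper `train_with_oom_retry` (not part of Ultralytics), could look like this; the exception type and cleanup function are passed in so the logic can be tried without a GPU:

```python
from typing import Any, Callable, Dict, Type


def train_with_oom_retry(
    train_fn: Callable[..., Any],
    kwargs: Dict[str, Any],
    oom_error: Type[BaseException],
    cleanup: Callable[[], None],
    max_retries: int = 1,
) -> Any:
    """Call train_fn(**kwargs); on oom_error, clean up and retry with resume=True.

    Hypothetical helper: gives up and re-raises after max_retries failed retries.
    """
    attempt = 0
    while True:
        try:
            return train_fn(**kwargs)
        except oom_error:
            if attempt >= max_retries:
                raise  # surface the original OOM error
            attempt += 1
            cleanup()  # e.g. torch.cuda.empty_cache()
            # Mirror the commented-out code: continue from the last checkpoint.
            kwargs = {**kwargs, "resume": True}
```

With the objects from the post, a call might be `train_with_oom_retry(model.train, dict(data='datasets/earrings_data.yaml', epochs=100, batch=16, imgsz=672, patience=0, resume=False), torch.OutOfMemoryError, torch.cuda.empty_cache)`. Note that `torch.cuda.empty_cache()` only releases cached blocks that are no longer in use, so it will not reclaim memory from a genuine leak like the one in this thread; retries would likely just postpone the crash.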

It is simple code, with no complicated steps.

Toxite

April 15, 2025, 12:08am


What was the version of Ultralytics you used that didn’t have the issue?

Sorry. I don’t know. I would like to know this myself.

I installed it around February 2025.

Training log before the error:

```
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
1/100 3.78G 1.008 2.661 1.336 57 672: 100%|██████████| 13/13 [00:03<00:00, 3.82it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 4.06it/s]
all 206 400 0.94 0.946 0.979 0.911
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
2/100 3.89G 0.5729 0.7992 1.028 63 672: 100%|██████████| 13/13 [00:03<00:00, 4.01it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.06it/s]
all 206 400 0.806 0.75 0.84 0.625
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
3/100 3.92G 0.6352 0.7481 1.048 39 672: 100%|██████████| 13/13 [00:03<00:00, 4.03it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.20it/s]
all 206 400 0.216 0.39 0.165 0.0441
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
4/100 4.02G 0.7442 0.7684 1.109 53 672: 100%|██████████| 13/13 [00:03<00:00, 4.04it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.24it/s]
all 206 400 0.405 0.422 0.387 0.174
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
5/100 4.09G 0.7166 0.7191 1.065 57 672: 100%|██████████| 13/13 [00:03<00:00, 4.05it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.19it/s]
all 206 400 0.47 0.863 0.662 0.415
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
6/100 4.15G 0.6953 0.6695 1.078 43 672: 100%|██████████| 13/13 [00:03<00:00, 4.04it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.18it/s]
all 206 400 0.495 0.647 0.498 0.262
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
7/100 4.21G 0.7525 0.6823 1.071 63 672: 100%|██████████| 13/13 [00:03<00:00, 4.06it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 4.98it/s]
all 206 400 0.565 0.64 0.493 0.231
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
8/100 4.28G 0.6851 0.5854 1.048 58 672: 100%|██████████| 13/13 [00:03<00:00, 4.06it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.22it/s]
all 206 400 0.0775 0.135 0.0471 0.0121
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
9/100 4.33G 0.6763 0.5711 1.059 54 672: 100%|██████████| 13/13 [00:03<00:00, 4.06it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.27it/s]
all 206 400 0.0838 0.155 0.0277 0.00727
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
10/100 4.41G 0.666 0.5589 1.045 67 672: 100%|██████████| 13/13 [00:03<00:00, 4.06it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.21it/s]
all 206 400 0.203 0.318 0.197 0.0654
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
11/100 4.46G 0.6612 0.55 1.04 41 672: 100%|██████████| 13/13 [00:03<00:00, 4.06it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.30it/s]
all 206 400 0.0164 0.198 0.0115 0.00232
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
12/100 4.52G 0.6715 0.5809 1.041 60 672: 100%|██████████| 13/13 [00:03<00:00, 4.06it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.22it/s]
all 206 400 0.527 0.48 0.552 0.36
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
13/100 4.6G 0.6548 0.5535 1.047 64 672: 100%|██████████| 13/13 [00:03<00:00, 4.07it/s]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 5.23it/s]
all 206 400 0.293 0.26 0.194 0.036
Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
14/100 4.63G 0.5743 0.5356 1.016 66 672: 8%|▊ | 1/13 [00:00<00:04, 2.91it/s]

Traceback (most recent call last):
```
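In the log, `GPU_mem` rises at every epoch, from 3.78G at epoch 1 to 4.63G when epoch 14 crashes, which is the typical signature of a leak rather than a single oversized batch. A small sketch for checking this in a saved log, assuming the per-epoch line format shown above (`epoch/total  <mem>G  ...`); the function names are illustrative, not an Ultralytics API:

```python
import re
from typing import List

# Matches the per-epoch progress line, e.g. "3/100 3.92G 0.6352 ..."
EPOCH_LINE = re.compile(r"^\s*(\d+)/(\d+)\s+([\d.]+)G\b")


def gpu_mem_per_epoch(log_text: str) -> List[float]:
    """Return the GPU_mem value (in GB) from every epoch line in a training log."""
    values = []
    for line in log_text.splitlines():
        match = EPOCH_LINE.match(line)
        if match:
            values.append(float(match.group(3)))
    return values


def grows_every_epoch(values: List[float]) -> bool:
    """True if there are at least two readings and each is higher than the last."""
    return len(values) > 1 and all(b > a for a, b in zip(values, values[1:]))
```

Run over the full log above, this should report all 14 readings as strictly increasing, with a total growth of about 0.85 GB.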

I think it all depends on the sample size, which was not the case before. I tried different cache settings, but that didn't help.

I have now installed Ultralytics 8.3.40 and everything works fine. Any ideas?

> Toxite:
>
> 8.3.104

8.3.104 has the memory leak.

I'm trying to find the version that introduced the problem.

The problem first appears in ultralytics-8.3.87.
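Narrowing it down between a known-good release (8.3.40) and a known-bad one (8.3.104) is a bisection. A minimal sketch, assuming a sorted list of releases and a hypothetical `is_bad(version)` check you supply yourself (for example, install that release and watch whether `GPU_mem` keeps climbing for a few epochs):

```python
from typing import Callable, Sequence, Tuple


def parse_version(v: str) -> Tuple[int, ...]:
    """Turn '8.3.87' into (8, 3, 87) so versions compare numerically."""
    return tuple(int(part) for part in v.split("."))


def first_bad_version(versions: Sequence[str], is_bad: Callable[[str], bool]) -> str:
    """Binary search for the first version where is_bad() is True.

    Assumes versions are sorted oldest to newest, versions[0] is good,
    versions[-1] is bad, and every version after the first bad one is also bad.
    """
    lo, hi = 0, len(versions) - 1  # invariant: versions[lo] good, versions[hi] bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_bad(versions[mid]):
            hi = mid
        else:
            lo = mid
    return versions[hi]
```

With a hypothetical contiguous list of 65 patch releases from 8.3.40 to 8.3.104, the loop needs only six `is_bad` checks instead of testing every release.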

I installed the latest version, ultralytics==8.3.108, and changed


Thanks for the feedback here! We’ve just opened a new PR to improve this:

main ← train-memory

opened 12:01PM - 15 Apr 25 UTC

Closes #19862

https://community.ultralytics.com/t/gpu-memory-leak/967/16

Users have reported OOM issues.

## 🛠️ PR Summary


### 🌟 Summary

Improves memory management during training by clearing memory more frequently.

### 📊 Key Changes

- Lowers the memory usage threshold for clearing memory from 90% to 50% during training.

### 🎯 Purpose & Impact

- 🧹 Reduces the risk of running out of memory, especially on devices with limited resources.

- 🚀 Helps maintain smoother and more stable training sessions for users.

- 💻 Makes YOLO training more reliable across a wider range of hardware.
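The PR's key change is a lower trigger point for freeing memory during training. As a rough illustration of threshold-based clearing (a sketch, not Ultralytics' actual trainer code; the helper names and the use of `torch.cuda.memory_reserved` as the usage measure are assumptions):

```python
import gc
from typing import Callable


def memory_fraction(used_bytes: int, total_bytes: int) -> float:
    """Fraction of device memory in use, between 0.0 and 1.0."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return used_bytes / total_bytes


def maybe_clear_memory(
    used_bytes: int,
    total_bytes: int,
    clear_fn: Callable[[], None],
    threshold: float = 0.5,
) -> bool:
    """Run gc and clear_fn only when usage exceeds threshold; return True if cleared."""
    if memory_fraction(used_bytes, total_bytes) > threshold:
        gc.collect()  # drop unreachable Python objects that may still hold tensors
        clear_fn()    # e.g. torch.cuda.empty_cache()
        return True
    return False


def torch_clear_if_needed(threshold: float = 0.5) -> bool:
    """CUDA variant (assumption: reserved memory vs. total device memory on GPU 0)."""
    import torch  # imported lazily so the helpers above work without PyTorch

    if not torch.cuda.is_available():
        return False
    total = torch.cuda.get_device_properties(0).total_memory
    used = torch.cuda.memory_reserved(0)
    return maybe_clear_memory(used, total, torch.cuda.empty_cache, threshold)
```

At 60% usage, a 0.9 threshold would not trigger a clear while 0.5 would, which is the behavior change the PR describes.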

DFK1991

September 9, 2025, 12:39pm


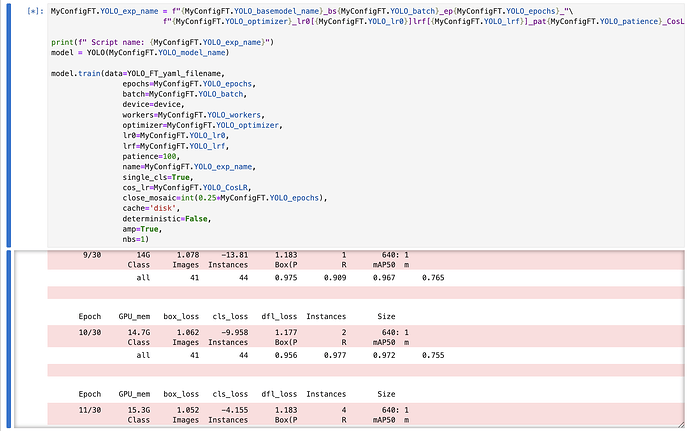

@MaestroV thank you so much for sharing your findings with us! I experienced exactly the same issue, but the things I tried before finding this thread did not help (screenshot attached), and other forums did not suggest anything useful.